
Ming Jin

About Me

I am currently an assistant professor at the School of Information and Communication Technology (ICT), Griffith University. Prior to this, I obtained my Ph.D. from the Faculty of Information Technology at Monash University in 2024.

I specialize in time series analytics and spatio-temporal data mining, with a good track record of publishing high-impact papers in top-ranked venues, including NeurIPS, ICLR, ICML, KDD, and TPAMI, among others.

My research outputs have been selected as Most Influential & Highly Cited Papers, and some, such as Time-LLM, have become widely used baselines that have gained substantial recognition in the open-source community.

I am a committee member of the IEEE CIS Task Force on AI for Time Series and Spatio-Temporal Data.

I also serve as an associate editor for Neurocomputing (Q1, IF 6.5) and actively contribute as an area chair or senior program committee member for prestigious AI and data mining conferences.

I am dedicated to conducting high-impact research and am open to collaboration.

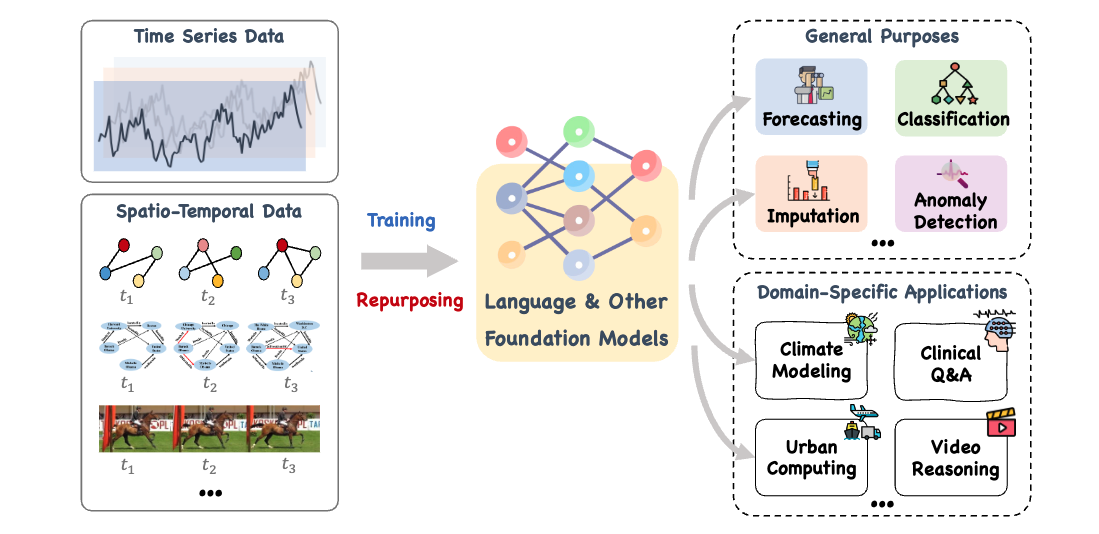

My research interests are in (1) time series intelligence, (2) spatio-temporal data mining, and (3) multimodal learning, with a special focus on temporal settings (e.g., physical AI on time series and spatio-temporal data) for solving both fundamental and real-world problems.

📌 Long-term Recruitment: PhD Students & Research Interns. I am looking for (1) well-matched (w.r.t. research interests), (2) qualified, and (3) highly self-motivated candidates to work with me. If you are interested, please fill out this Google Form and I will be in touch. Scholarships may be available in 2026/2027. I have no quota for 2026 CSC visiting PhD students but am open to applications for next year.

Due to the large volume of emails I receive daily, I apologize in advance for not being able to respond to each one individually.

Research Interests

I work in the field of time series and spatio-temporal data mining, primarily investigating the development and application of advanced machine learning techniques to understand complex patterns in temporal data. I focus on the following research topics:

- Time Series Intelligence (e.g., pattern discovery, foundation/reasoning models, and next-gen time series AI systems)

- Spatio-temporal Data Mining (e.g., learning on evolving graph-structured data and AI for transportation/climate/energy science)

- Multimodal AI (e.g., agentic time series AI solutions for healthcare and other high-value applications)

- Trustworthy AI (e.g., time series AI safety including model transparency and human-in-the-loop deployment)

Recent News

- [02/2026] Honored to receive the Dean's Commendation for Research Excellence (2025)

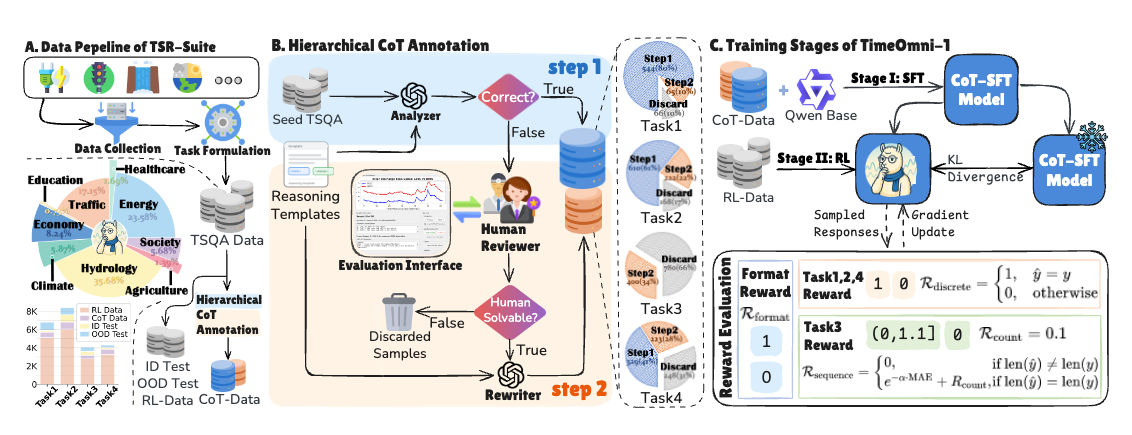

- [02/2026] Our paper TimeOmni-1 has been accepted by ICLR 2026 (CORE A*). Check our demo here.

- [01/2026] Honored to give a talk on "Time Series Foundation Models" at AAAI 2026 (CORE A*)

- [12/2025] Honored to be named among IEEE Computing's Top 30 Early Career Professionals (2025)

- [12/2025] We have secured a WUN Research Development Fund (2025) grant for vehicle-to-grid optimization

- [12/2025] Our paper Source-free Time Series Domain Adaptation with Prior Evaluation of Model Salience has been accepted by IEEE Transactions on Neural Networks and Learning Systems

- [11/2025] Our paper FaST: Efficient and Effective Long-Horizon Forecasting for Large-Scale Spatial-Temporal Graphs via Mixture-of-Experts has been accepted by KDD 2026 (CORE A*)

- [11/2025] Our paper TimeDistill: Efficient Long-Term Time Series Forecasting with MLP via Cross-Architecture Distillation has been accepted by KDD 2026 (CORE A*)

- [11/2025] The 2nd International Workshop on Spatio-Temporal Data Mining from the Web has been accepted by WWW 2026

- [10/2025] A Survey on Diffusion Models for Time Series and Spatio-Temporal Data has been accepted by ACM Computing Surveys

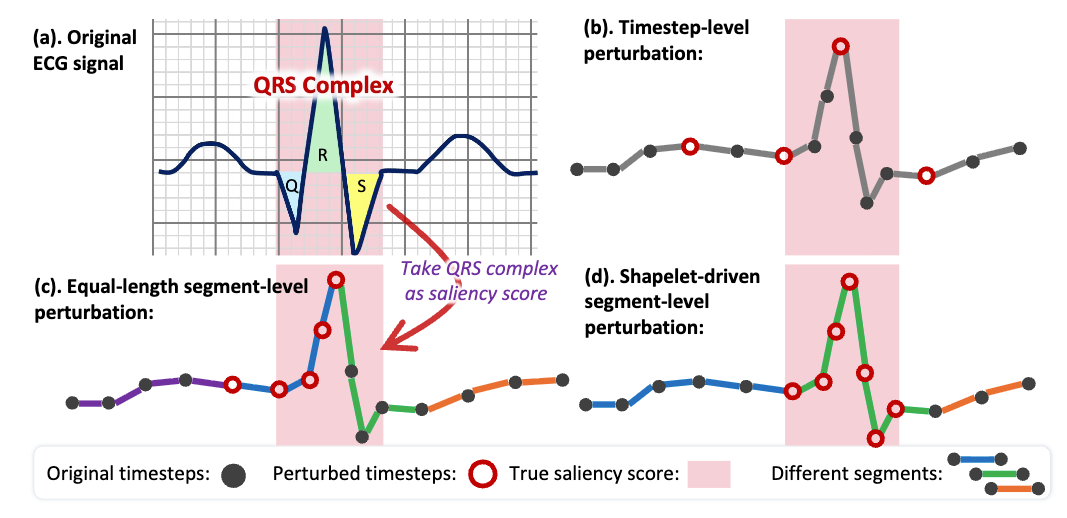

- [09/2025] Our paper ShapeX: Shapelet-Driven Post Hoc Explanations for Time Series Classification Models has been accepted by NeurIPS 2025 (CORE A*)

- [09/2025] Our benchmark paper on Self-Correction in LLMs has been accepted by NeurIPS 2025 (CORE A*)

- [09/2025] Our paper Multi-Scale Fine-tuning for Encoder-based Time Series Foundation Models has been accepted by NeurIPS 2025 (CORE A*)

- [09/2025] Our paper Continuous State Space Modeling on Dynamic Graphs has been accepted by NeurIPS 2025 (CORE A*)

- [09/2025] I have secured an NVIDIA Academic Grant for developing next-gen time series analytical engines

- [09/2025] I will serve as an Area Chair for ICLR 2026 and ICASSP 2026

- [09/2025] Our tutorial on Foundation Models for Time Series Analysis has been accepted by AAAI 2026 (CORE A*)

- [08/2025] Our paper Test-time GNN Model Evaluation on Dynamic Graphs has been accepted by ICDM 2025 (CORE A*)

- [08/2025] Our applied research on salinity prediction using sparse drifter data has been accepted by CIKM 2025 (CORE A)

- [07/2025] Our applied research paper Large lithium-ion battery model for secure shared electric bike battery in smart cities has been accepted by Nature Communications (IF 15.7)

- [07/2025] Our applied research on supply chain forecasting with time series foundation model has been accepted by ITSC 2025

- [06/2025] Honored to serve on the Program Committee of the IEEE CIS Task Force on AI for Time Series and Spatio-Temporal Data

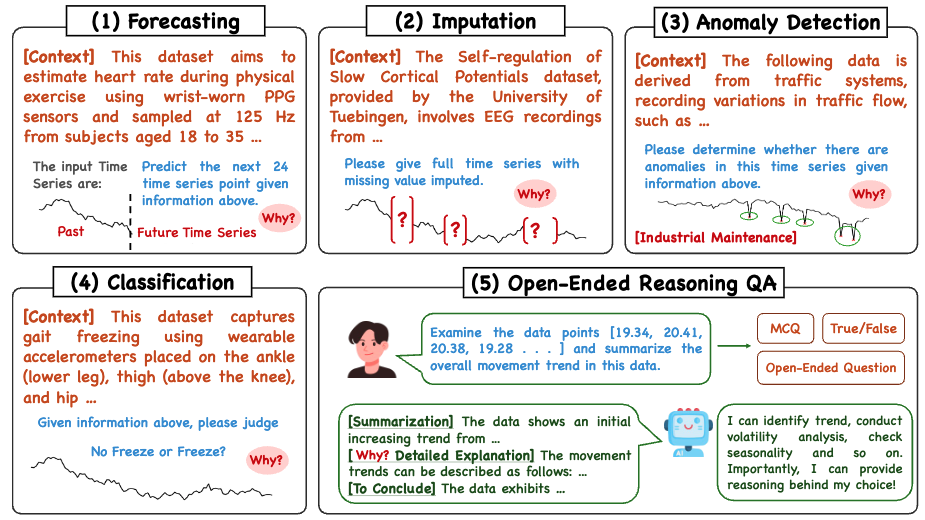

- [05/2025] Our paper Time-MQA: Time Series Multi-Task Question Answering with Context Enhancement has been accepted by ACL 2025 (CORE A*)

- [05/2025] Our paper Flow-based Reconcile Transformer for Hierarchical Time Series has been accepted by KDD 2025 (CORE A*)

- [05/2025] Our tutorial on Foundation Models for Spatio-Temporal Data Science has been accepted by KDD 2025 (CORE A*)

- [05/2025] Our paper Time-VLM: Exploring Multimodal Vision-Language Models for Augmented Time Series Forecasting has been accepted by ICML 2025 (CORE A*)

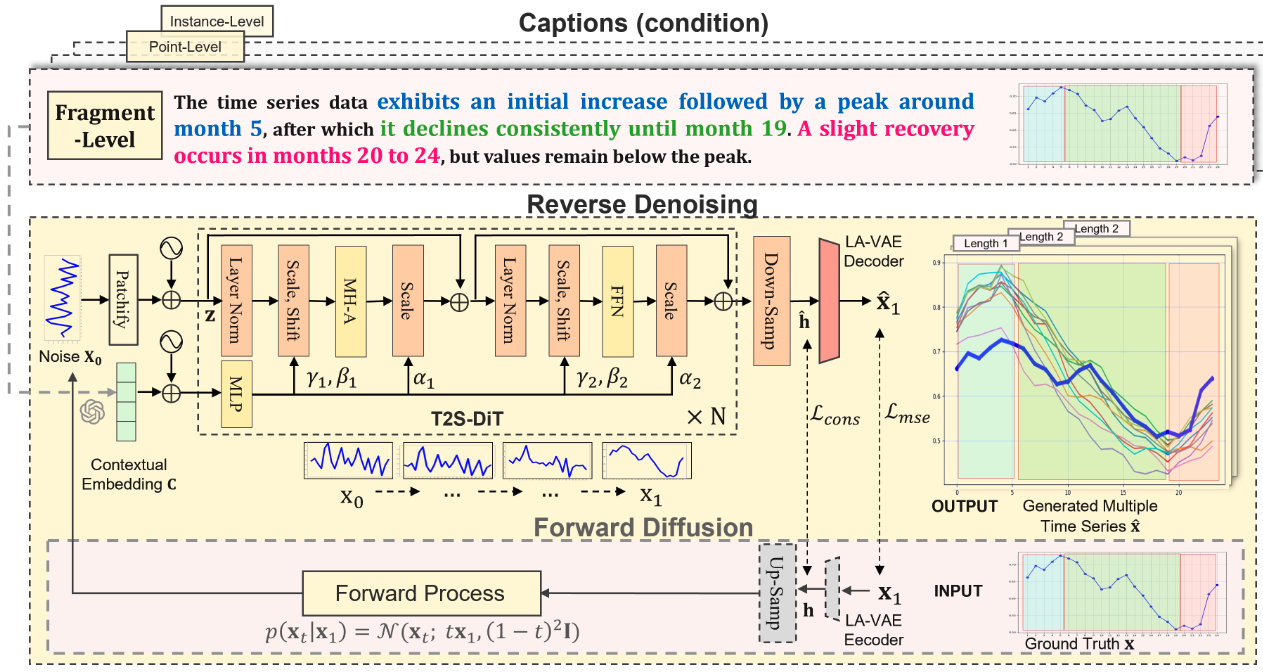

- [04/2025] Our paper T2S: High-resolution Time Series Generation with Text-to-Series Diffusion Models has been accepted by IJCAI 2025 (CORE A*)

- [04/2025] Honored to deliver a lecture-style tutorial on "Time Series Analysis in the Web" at WWW 2025 (CORE A*)

- [02/2025] Our paper Towards Expressive Spectral-Temporal Graph Neural Networks for Time Series Forecasting has been accepted by IEEE Transactions on Pattern Analysis and Machine Intelligence (IF 20.8)

- [02/2025] Honored to deliver a lecture-style tutorial on "Time Series Foundation Models" at AAAI 2025 (CORE A*)

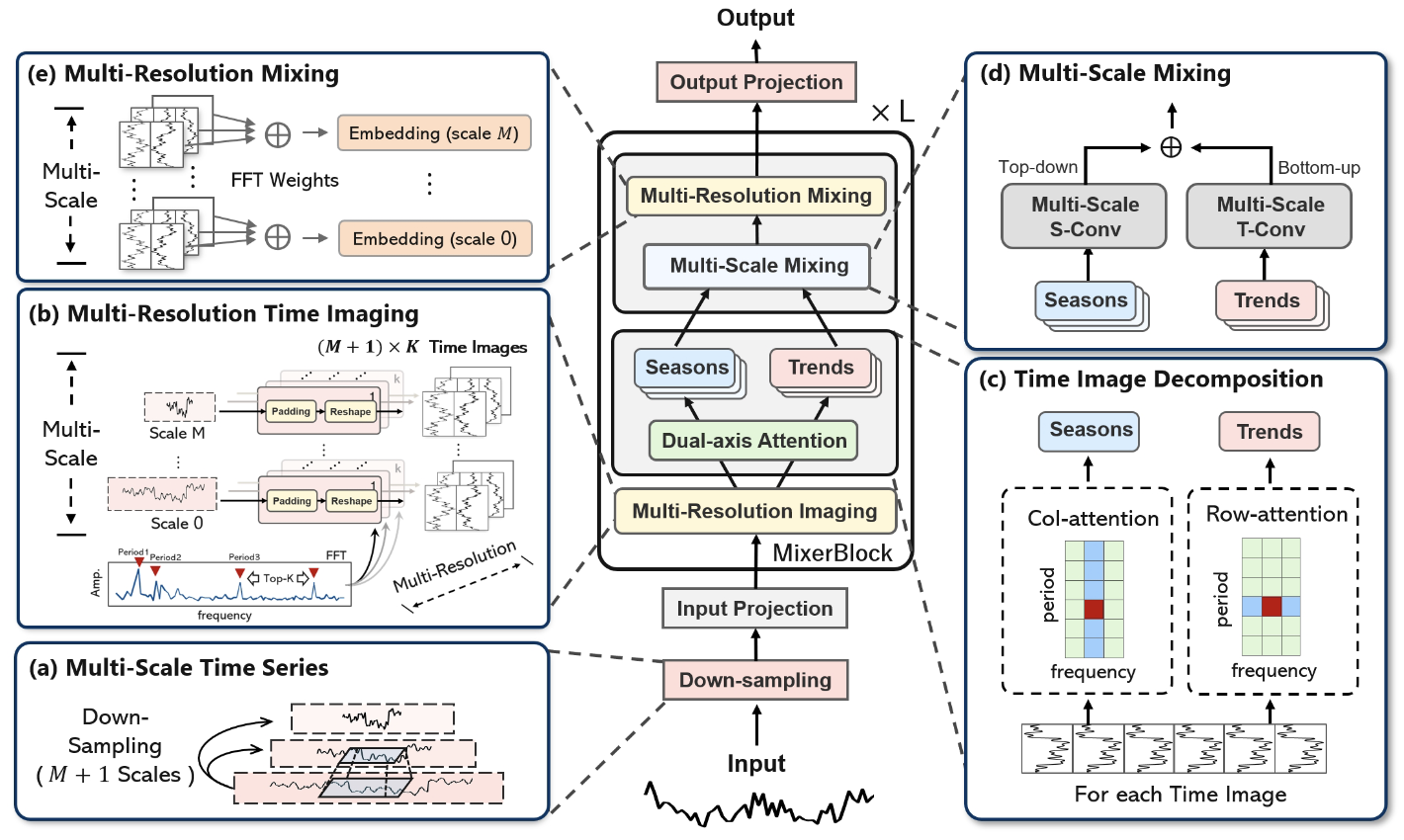

- [01/2025] Our paper TimeMixer++: A General Time Series Pattern Machine for Universal Predictive Analysis has been accepted by ICLR 2025 (CORE A*) as an Oral (Top 1.8%)

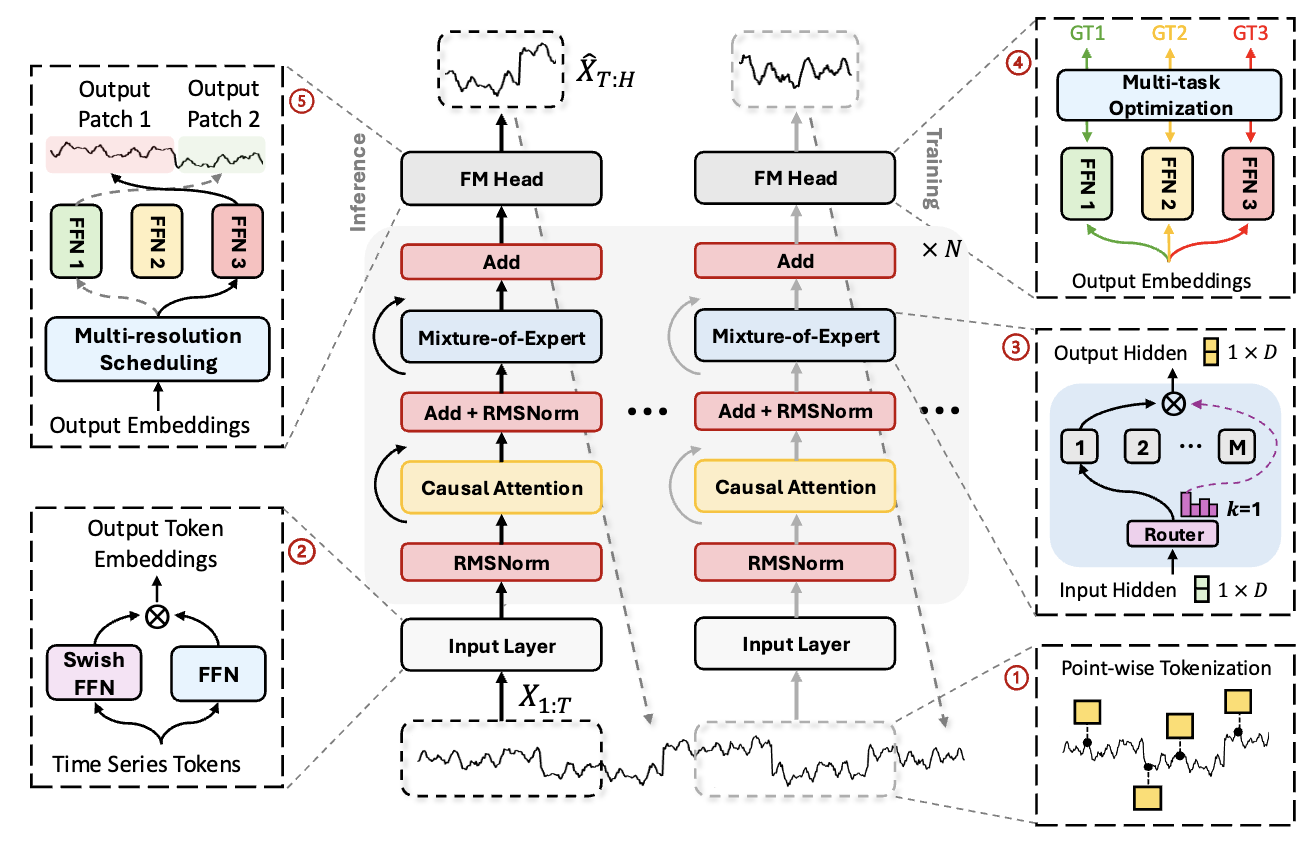

- [01/2025] Our paper Time-MoE: Billion-Scale Time Series Foundation Models with Mixture of Experts has been accepted by ICLR 2025 (CORE A*) as a Spotlight (Top 5%)

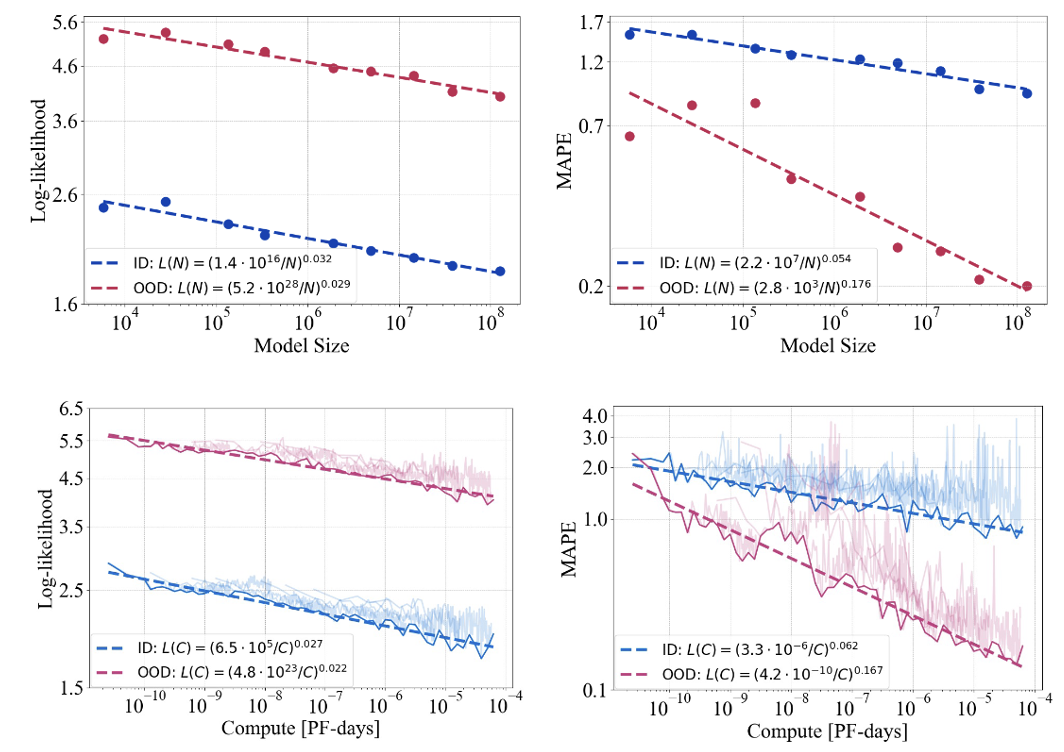

- [01/2025] Our paper Towards Neural Scaling Laws for Time Series Foundation Models has been accepted by ICLR 2025 (CORE A*)

Selected Publications [Full List] (^Co-first author; *Corresponding author)

|

TimeOmni-1: Incentivizing Complex Reasoning with Time Series in Large Language Models |

|

ShapeX: Shapelet-Driven Post Hoc Explanations for Time Series Classification Models |

|

T2S: High-resolution Time Series Generation with Text-to-Series Diffusion Models |

|

Time-MQA: Time Series Multi-Task Question Answering with Context Enhancement |

|

Time-MoE: Billion-Scale Time Series Foundation Models with Mixture of Experts |

|

TimeMixer++: A General Time Series Pattern Machine for Universal Predictive Analysis |

|

Towards Neural Scaling Laws for Time Series Foundation Models |

|

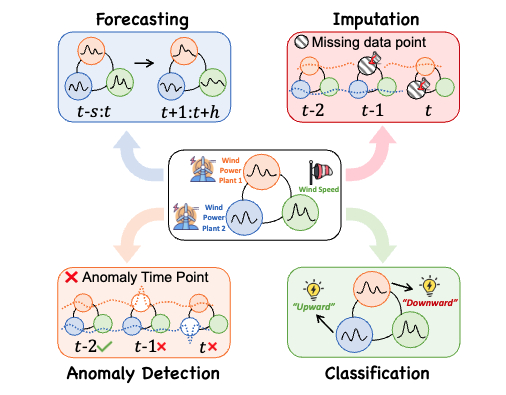

A Survey on Graph Neural Networks for Time Series: Forecasting,

Classification, Imputation, |

|

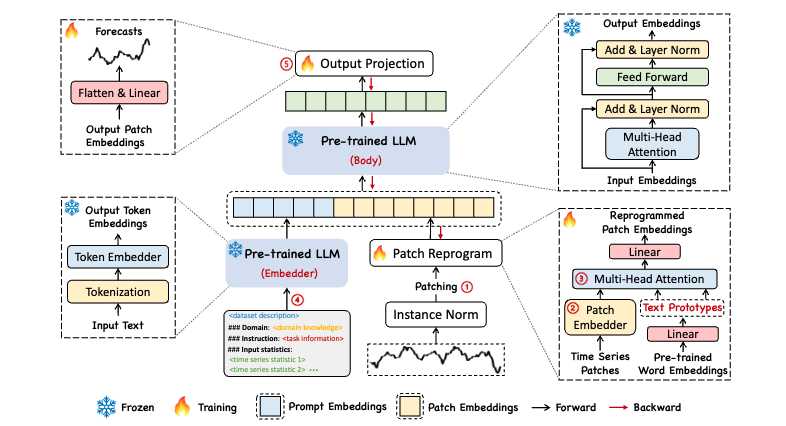

Time-LLM: Time Series Forecasting by Reprogramming Large Language Models |

|

Towards Expressive Spectral-Temporal Graph Neural Networks for Time Series Forecasting |

|

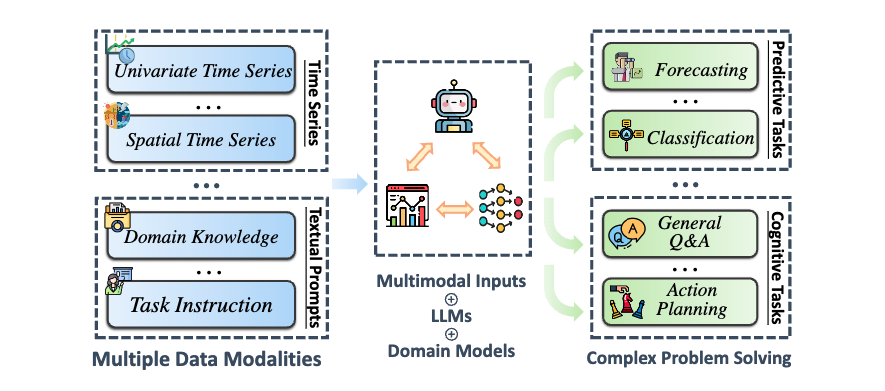

Position: What Can Large Language Models Tell Us about Time Series Analysis |

|

Large Models for Time Series and Spatio-Temporal Data: A Survey and Outlook |

|

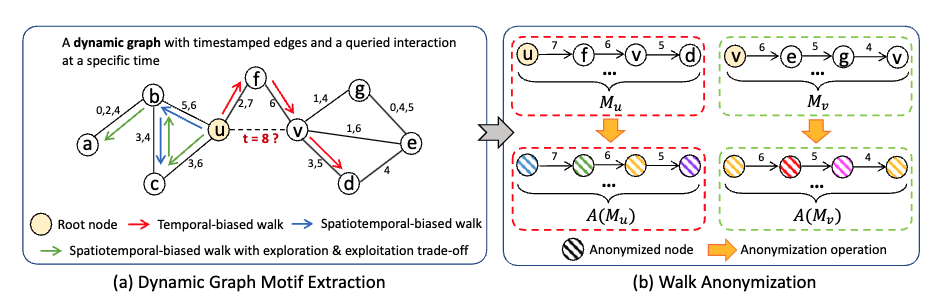

Neural Temporal Walks: Motif-Aware Representation Learning on Continuous-Time Dynamic Graphs |

|

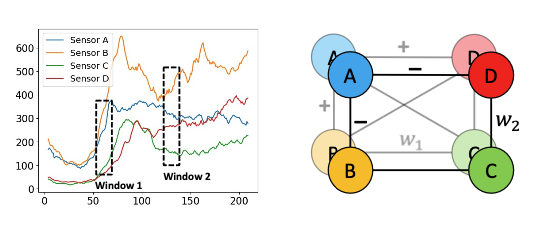

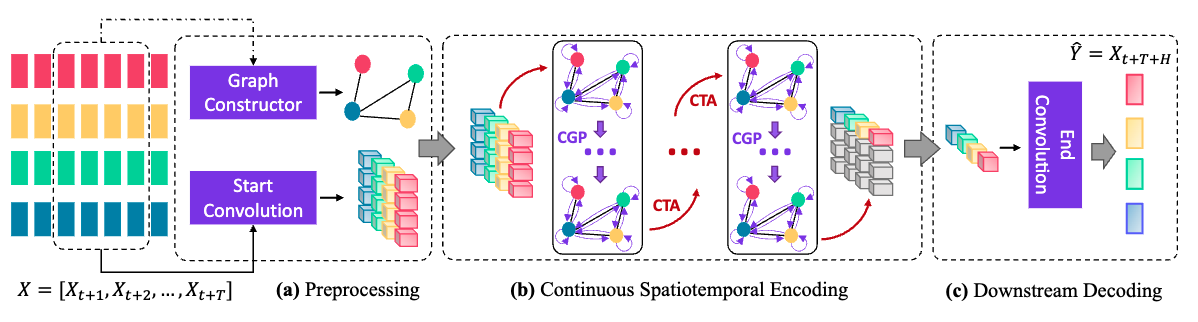

Multivariate Time Series Forecasting with Dynamic Graph Neural ODEs |

|

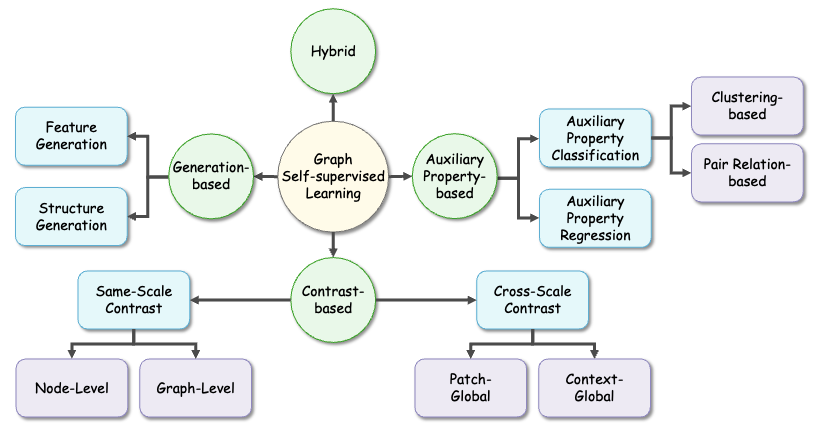

Graph Self-Supervised Learning: A Survey |

|

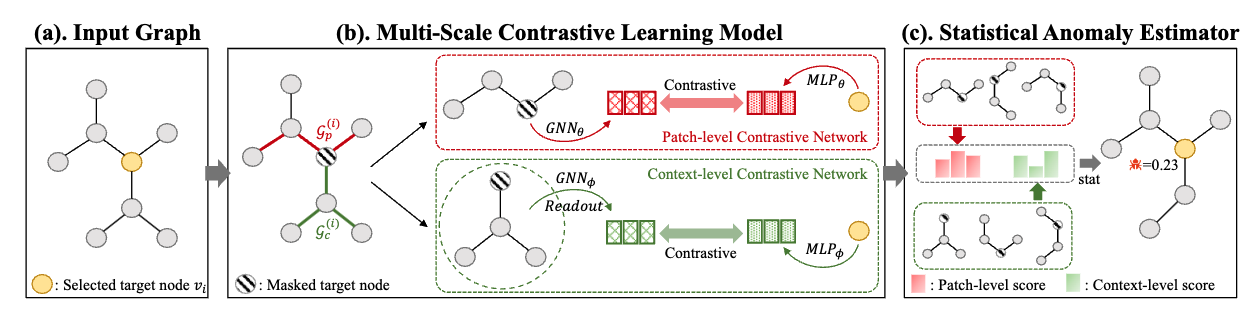

ANEMONE: Graph Anomaly Detection with Multi-Scale Contrastive Learning |

|

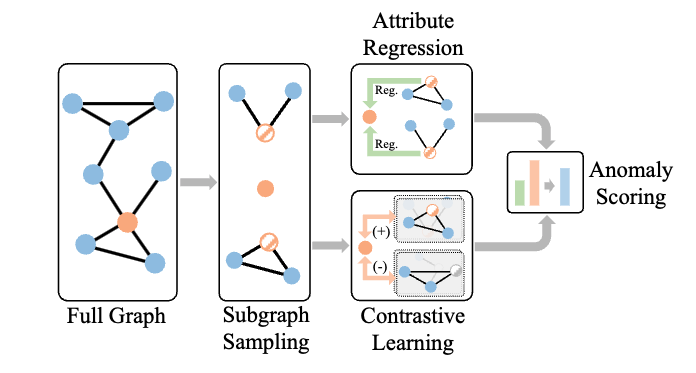

Generative and Contrastive Self-Supervised Learning for Graph Anomaly Detection |

|

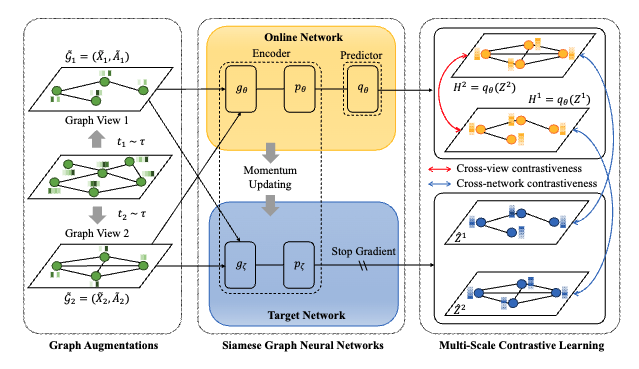

Multi-Scale Contrastive Siamese Networks for Self-Supervised Graph Representation Learning |

Selected Talks

- [01/2026] Keynote talk on "Time Series Foundation Models" (ver. 3) at AAAI 2026

- [11/2025] Keynote talk on "A Path Towards Time Series Intelligence" (ver. 1) at ACPR 2025

- [04/2025] Keynote talk on "Time Series Analysis in the Web: History, Preliminaries, and Challenges" at WWW 2025 [Slides]

- [02/2025] Keynote talk on "Time Series Foundation Models" (ver. 2) at AAAI 2025

- [10/2024] Invited talk on "Time Series Foundation Models" (ver. 1) at MLBoost [Video]

- [09/2024] Invited talk on "Graph Neural Networks for Time Series" at MLBoost [Video]

- [08/2024] Keynote talk on "Time Series Foundation Models" (ver. 1) at KDD 2024 [Slides]

- [05/2024] Invited talk on "What Can Large Language Models Tell Us About Time Series Analysis" at Salesforce [Slides]

- [04/2024] Invited talk on "Time Series Forecasting by Reprogramming Large Language Models" at "Talk on MLLM" [Slides] [Video]

- [02/2024] Invited talk on "Time Series Forecasting by Reprogramming Large Language Models" at AI TIME [Slides] [Video]

- [10/2023] Invited talk on "Graph Neural Networks for Time Series" at TGL Workshop [Slides]

- [03/2023] Invited talk on "Learning on Continuous-Time Dynamic Graphs" at TGL Workshop [Slides] [Video]

- [02/2023] Invited talk on "Learning on Continuous-Time Dynamic Graphs" at AI TIME [Video]

Services

Conferences:

- Program Chair, The 2nd International Workshop on Spatio-Temporal Data Mining from the Web at The Web Conference 2026

- Program Chair, The Workshop on Rethinking Financial Time Series: Foundations, Frontiers, and Future Directions at ICAIF 2025

- Program Chair, The Workshop of Artificial Intelligence for Web-Centric Time Series Analysis (AI4TS): Theory, Algorithms, and Applications at The Web Conference 2025

- Program Chair, The International Workshop on Spatio-Temporal Data Mining from the Web at The Web Conference 2025

- Program Chair, The Workshop of Deep Learning for Graphs (DL4G) at IJCNN 2023

- Organizer, Tutorial on Foundation Models for Time Series Analysis at AAAI 2026

- Organizer, Tutorial on Foundation Models for Spatio-Temporal Data Science at KDD 2025

- Organizer, Tutorial on Time Series Analysis in the Web: Recent Advances and Future Trends at The Web Conference 2025

- Organizer, Tutorial on Foundation Models for Time Series (FM4TS) at AAAI 2025

- Organizer, Tutorial on Foundation Models for Time Series (FM4TS) at KDD 2024

- Organizer, Tutorial on Graph Self-Supervised Learning: Taxonomy, Frontiers, and Applications at IJCNN 2023

- Area Chair, ICASSP 2025, NeurIPS 2025, ICASSP 2026, ICLR 2026

- Handled hundreds of submissions for top-tier AI/ML/DM conferences, e.g., NeurIPS, ICLR, ICML, IJCAI, and AAAI.

Journals:

- Associate Editor (Main areas: time series & spatio-temporal data mining), Neurocomputing (Q1; IF: 6.5)

- Handled 40+ submissions for top IEEE Transactions (e.g., TPAMI, TNNLS, TKDE, and TCYB) and high-impact journals (e.g., Nature Machine Intelligence and Nature Communications)

Others:

- Program Committee, IEEE Task Force on AI for Time Series and Spatio-Temporal Data

Teaching

- 2026 Trimester 1 3016ICT Secure Development Operations, Griffith University

- 2025 Trimester 2 2808ICT/7623ICT Secure Development Operations, Griffith University

- 2024 Trimester 3 1007ICT/7611ICT Computer Systems and Cyber Security, Griffith University

- 2024 Semester 1 FIT5212 Data Analysis for Semi-Structured Data, Monash University

- 2023 Semester 1 Spring FIT5212 Data Analysis for Semi-Structured Data, Monash University